Today’s GenAI arms race is fought with novel chip architectures and packaging. Specialized hardware designs are proliferating in the form of GPUs, TPUs, NPUs, and more, all tuned for parallelism and matrix-heavy AI math.

In this hyper-competitive landscape, chip vendors scramble to differentiate their products on multiple fronts. They promise some mix of better performance, efficiency, or scalability, but the specific strategies vary widely:

Some chipmakers aim to outgun the competition with sheer performance. Flagship GPUs, for example, focus on FLOPS and huge memory throughput. While memory is a critical factor in GenAI performance, this paper focuses on compute throughput bottlenecks.

One approach that chipmakers employ to win this category is advanced packaging, connecting multiple silicon chiplets in a single heterogeneous device to increase performance density.

Even a 10% speed improvement can have a profound impact at this scale. For example, training a model like LLaMA 3.1 405B involved 16,000 GPUs, consumed approximately 27 megawatts, and required an estimated 40 billion PFLOPs of total compute (about 4 × 10^25 floating-point operations) [23]. A 10% gain at that scale can reduce training time by several weeks and eliminate the need for thousands of GPU-days, translating to millions of dollars in infrastructure savings.
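The arithmetic behind a training speedup can be sketched in a few lines. One subtlety: a 10% throughput gain finishes a fixed amount of work in 1/1.1 of the time, so it saves about 9.1% of the run, not a full 10%. The 40 billion PFLOPs and 16,000 GPUs come from the text; the helper names are hypothetical, and any baseline run length you pass in is your own assumption, not a reported figure.

```python
# Back-of-the-envelope impact of a speedup on a fixed training workload.
# TOTAL_PFLOP and NUM_GPUS come from the text; baseline durations are user-supplied assumptions.

TOTAL_PFLOP = 40e9          # ~40 billion PFLOPs of total training compute [23]
FLOPS_PER_PFLOP = 1e15
NUM_GPUS = 16_000


def total_flops(pflop: float) -> float:
    """Convert a total compute budget from PFLOPs to FLOPs."""
    return pflop * FLOPS_PER_PFLOP


def time_saved(baseline_days: float, speedup: float) -> float:
    """Days saved when throughput improves by `speedup` (0.10 means 10%).

    Fixed work finishes in baseline / (1 + speedup), so the saving is
    baseline * (1 - 1 / (1 + speedup)), slightly less than baseline * speedup.
    """
    return baseline_days * (1.0 - 1.0 / (1.0 + speedup))


def gpu_days_saved(baseline_days: float, speedup: float, num_gpus: int = NUM_GPUS) -> float:
    """GPU-days freed across the whole cluster."""
    return time_saved(baseline_days, speedup) * num_gpus


if __name__ == "__main__":
    print(f"Total compute: {total_flops(TOTAL_PFLOP):.1e} FLOPs")              # 4.0e+25 FLOPs
    print(f"Share of run time saved at 10%: {time_saved(1.0, 0.10):.1%}")      # 9.1%
```

Plugging any baseline duration into `gpu_days_saved(baseline_days, 0.10)` gives the cluster-wide GPU-days freed under that assumption.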

In large-scale AI inference operations, even modest throughput enhancements can lead to significant cost reductions. For instance, OpenAI's GPT-4 processes approximately 50 billion queries annually, incurring an estimated $144 million in compute costs [24]. A 10% throughput improvement would let roughly 9% fewer servers carry the same load (1 − 1/1.1 ≈ 0.091), saving on the order of $13 million a year, close to the $14.4 million that a flat 10% cost cut would yield.

Throughput optimization also reduces inference latency, a critical factor in user experience. For example, the response time of OpenAI's GPT-4 model has been measured at approximately 196 milliseconds per generated token [25]. If per-token generation time scales inversely with throughput, a 10% throughput gain would bring this to roughly 178 milliseconds (196 / 1.1), yielding faster responses and improved user satisfaction.
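The inference figures above can be reproduced with a small model that assumes both serving cost and per-token latency scale inversely with throughput. That is a simplifying assumption: real fleets have fixed overheads, batching effects, and network latency that do not shrink with compute speed. The helper names are hypothetical; the inputs are the estimates cited in the text.

```python
# Simplified model: server count and per-token latency scale as 1 / (1 + speedup).
# Inputs are the estimates quoted in the text; helper names are hypothetical.

ANNUAL_COMPUTE_COST_USD = 144e6   # estimated GPT-4 compute cost per year [24]
MS_PER_TOKEN = 196.0              # measured GPT-4 latency per generated token [25]


def capacity_fraction(speedup: float) -> float:
    """Fraction of the original fleet needed for the same load after a speedup."""
    return 1.0 / (1.0 + speedup)


def annual_savings(cost_usd: float, speedup: float) -> float:
    """Compute cost avoided, assuming spend scales linearly with server count."""
    return cost_usd * (1.0 - capacity_fraction(speedup))


def latency_after(ms_per_token: float, speedup: float) -> float:
    """Per-token latency, assuming generation time scales inversely with throughput."""
    return ms_per_token * capacity_fraction(speedup)


if __name__ == "__main__":
    print(f"Savings: ${annual_savings(ANNUAL_COMPUTE_COST_USD, 0.10) / 1e6:.1f}M")  # $13.1M
    print(f"Latency: {latency_after(MS_PER_TOKEN, 0.10):.0f} ms/token")            # 178 ms/token
```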

Performance improvements typically begin at design time with architecture exploration and RTL optimization, such as tuning pipeline depth, compute unit allocation, and dataflow design. In the field, chipmakers then apply techniques like standard Adaptive Frequency Scaling (AFS) to push performance under dynamic operating conditions.

However, these runtime methods are generally static and not workload-aware, leading to suboptimal performance in real-world deployments. Frequency scaling is also done conservatively to preserve thermal and functional stability. While these approaches help extract more performance within safe limits, they may fall short of what GenAI workloads demand.

GenAI’s exponential growth in computational requirements is forcing chipmakers to pay closer attention to power consumption. Beyond immediate consequences such as thermal problems, excessive wattage has severe implications for customers’ operational costs.

As a consequence, design wins increasingly revolve around Total Cost of Ownership (TCO). This metric factors in not only the upfront hardware cost but also ongoing expenses like power, cooling, and infrastructure. Solutions that deliver more compute per watt can significantly reduce TCO and make large-scale AI deployments more sustainable.
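TCO can be made concrete with a simple per-device model. The sketch below adds upfront hardware cost to lifetime energy cost, uses PUE (power usage effectiveness, the ratio of total facility power to IT power) to fold in cooling and power-delivery overhead, and normalizes by throughput so devices of different speeds can be compared. The `Accelerator` type and every price, power, and lifetime value are hypothetical placeholders; space, staffing, and networking costs are left out.

```python
from dataclasses import dataclass

HOURS_PER_YEAR = 8760


@dataclass
class Accelerator:
    name: str
    capex_usd: float       # upfront hardware cost per device (hypothetical)
    avg_power_w: float     # average power draw in service (hypothetical)
    relative_perf: float   # throughput normalized to a reference device (hypothetical)


def tco_usd(dev: Accelerator, years: float, usd_per_kwh: float, pue: float) -> float:
    """Upfront cost plus lifetime energy cost for one device.

    PUE scales IT power up to total facility power, so cooling and
    power-delivery overhead show up in the energy bill.
    """
    energy_kwh = dev.avg_power_w / 1000.0 * HOURS_PER_YEAR * years * pue
    return dev.capex_usd + energy_kwh * usd_per_kwh


def tco_per_perf(dev: Accelerator, years: float, usd_per_kwh: float, pue: float) -> float:
    """TCO divided by normalized throughput, i.e. cost per unit of delivered compute."""
    return tco_usd(dev, years, usd_per_kwh, pue) / dev.relative_perf


if __name__ == "__main__":
    baseline = Accelerator("baseline", capex_usd=25_000, avg_power_w=700, relative_perf=1.0)
    efficient = Accelerator("efficient", capex_usd=25_000, avg_power_w=600, relative_perf=1.0)
    for dev in (baseline, efficient):
        print(dev.name, round(tco_per_perf(dev, years=4, usd_per_kwh=0.10, pue=1.3), 2))
```

Normalizing by `relative_perf` is what turns "more compute per watt" into a lower cost per unit of work, even when two devices carry the same sticker price.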

Furthermore, reducing the power consumption of individual devices directly expands what the infrastructure can deliver. Every watt saved per chip frees up headroom within the data center’s fixed power budget, enabling higher system utilization across the fleet.

That headroom lets operators run more workloads, serve more users, or deploy additional systems without breaching energy limits. Improving performance per watt (PPW) at the chip level therefore becomes a strategic lever for maximizing performance within existing power constraints.
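Under a fixed power envelope, per-chip savings convert directly into extra deployable devices. A minimal sketch, assuming a hypothetical 10 MW facility budget and a flat overhead multiplier standing in for host, networking, and cooling power; `devices_in_budget` is an illustrative helper, not an established tool.

```python
import math


def devices_in_budget(budget_kw: float, watts_per_device: float, overhead: float = 1.0) -> int:
    """Number of devices that fit under a fixed facility power budget.

    `overhead` is a hypothetical flat multiplier on device power that stands in
    for host CPUs, networking, and cooling.
    """
    per_device_kw = watts_per_device * overhead / 1000.0
    return math.floor(budget_kw / per_device_kw)


if __name__ == "__main__":
    BUDGET_KW = 10_000   # hypothetical 10 MW facility budget
    OVERHEAD = 1.5       # hypothetical facility overhead factor
    before = devices_in_budget(BUDGET_KW, 700, OVERHEAD)   # 9523
    after = devices_in_budget(BUDGET_KW, 665, OVERHEAD)    # 10025 (5% less power per device)
    print(f"Extra devices in the same envelope: {after - before}")  # 502
```

With the budget fixed, a 5% per-device power cut yields roughly 5% more devices, and so roughly 5% more fleet throughput, without touching the facility.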

To explore how this dynamic plays out across real data center deployments, read the full blog post here.

Power efficiency is typically optimized through a combination of design-time techniques and runtime control. Clock gating, power gating, and multi-voltage domains are widely used at the architecture and implementation levels to reduce dynamic and leakage power.

At runtime, methods like Dynamic Voltage and Frequency Scaling (DVFS) and Adaptive Voltage Scaling (AVS) adjust power consumption based on static models or basic telemetry, such as temperature or process variation. These standard techniques are not workload-aware and typically apply uniform guard bands, sized so that every device stays stable under every workload.
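To make "static models, basic telemetry, and uniform guard bands" concrete, here is a hypothetical table-driven DVFS policy. It picks an operating point from coarse utilization and temperature readings, then applies the same voltage margin to every die, regardless of how much that particular die actually needs. The V/f table, guard band, and thermal limit are illustrative assumptions, not values from any real product.

```python
# Hypothetical V/f table: frequency (MHz) -> minimum voltage (V) characterized for
# worst-case silicon. Illustrative values only, not from any real product.
VF_TABLE = {800: 0.65, 1200: 0.72, 1600: 0.80, 2000: 0.90}
FREQS_MHZ = sorted(VF_TABLE)
GUARD_BAND_V = 0.05   # same margin added for every die (assumed value)
TEMP_LIMIT_C = 95.0   # thermal throttle threshold (assumed value)


def pick_frequency(utilization: float, temp_c: float) -> int:
    """Static DVFS policy: choose an operating point from coarse telemetry only."""
    if temp_c >= TEMP_LIMIT_C:
        return FREQS_MHZ[0]                      # throttle to the lowest point
    u = min(max(utilization, 0.0), 1.0)          # clamp to [0, 1]
    idx = min(int(u * len(FREQS_MHZ)), len(FREQS_MHZ) - 1)
    return FREQS_MHZ[idx]


def voltage_for(freq_mhz: int) -> float:
    """Table voltage plus the uniform guard band; identical for every chip."""
    return VF_TABLE[freq_mhz] + GUARD_BAND_V


def excess_margin(freq_mhz: int, actual_vmin: float) -> float:
    """Voltage applied beyond what this particular die actually needs."""
    return voltage_for(freq_mhz) - actual_vmin


if __name__ == "__main__":
    f = pick_frequency(utilization=0.6, temp_c=70.0)
    # A hypothetical fast die that is stable at 1600 MHz with only 0.74 V:
    print(f, round(voltage_for(f), 2), round(excess_margin(f, 0.74), 2))  # 1600 0.85 0.11
```

Nothing in this loop observes the die's real timing behavior or the running workload, which is exactly the blind spot described above.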

As a result, they leave significant excess guard band on most chips, burning unnecessary power and undermining PPW. This inefficiency calls for more precise, real-time approaches that optimize power without compromising performance or reliability.
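Why excess guard band is so costly follows from the standard CMOS switching-power relation P ≈ αCV²f: dynamic power grows with the square of supply voltage, so even a small voltage trim at a fixed frequency pays off disproportionately. The sketch below uses hypothetical rail voltages and ignores leakage power, which also rises with voltage.

```python
def dynamic_power_w(alpha: float, cap_farads: float, volts: float, freq_hz: float) -> float:
    """Switching power of CMOS logic: P = alpha * C * V^2 * f.

    alpha is the activity factor (fraction of capacitance switched per cycle).
    """
    return alpha * cap_farads * volts ** 2 * freq_hz


def dynamic_saving(v_old: float, v_new: float) -> float:
    """Fractional dynamic-power saving from lowering V at an unchanged frequency."""
    return 1.0 - (v_new / v_old) ** 2


if __name__ == "__main__":
    # Hypothetical: trimming 50 mV of unneeded guard band from a 0.85 V rail.
    print(f"{dynamic_saving(0.85, 0.80):.1%}")  # 11.4%
```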

A chip’s reliability at scale is just as critical as its raw performance. Defective Parts Per Million (DPPM) measures how many chips per million shipped turn out to be defective after manufacturing, directly impacting system uptime and operational costs. While semiconductor testing filters out detectable defects, latent issues stay hidden until real workloads expose them. As GenAI compute infrastructure scales to millions of deployed chips, even a low DPPM can translate to frequent failures with substantial consequences.
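The effect of scale on DPPM is easy to quantify. The sketch below, assuming a hypothetical escape rate of 100 DPPM and independent defects across chips, computes the expected number of defective parts in a million-chip fleet and the chance that a single 25,000-device job lands on at least one of them. Both helpers are illustrative, not an industry-standard model.

```python
import math


def expected_defective(dppm: float, fleet_size: int) -> float:
    """Expected number of defective chips in a fleet at a given DPPM."""
    return dppm * fleet_size / 1e6


def p_job_hits_defect(dppm: float, chips_in_job: int) -> float:
    """Probability that a job spanning `chips_in_job` chips includes at least
    one defective part, assuming defects are independent across chips.

    Computes 1 - (1 - p)^n via log1p/expm1 for accuracy when p is tiny.
    """
    p = dppm / 1e6
    return -math.expm1(chips_in_job * math.log1p(-p))


if __name__ == "__main__":
    DPPM = 100  # hypothetical escape rate, for illustration only
    print(expected_defective(DPPM, 1_000_000))        # 100.0
    print(f"{p_job_hits_defect(DPPM, 25_000):.0%}")   # 92%
```

A defect rate that looks negligible per chip becomes a near-certainty once a single job spans tens of thousands of devices.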

Furthermore, Silent Data Corruption (SDC) has emerged as a critical reliability threat to scaling GenAI training, because it corrupts computations without triggering any alert. Unlike memory bit flips, which error correction codes (ECC) can detect and correct, SDCs originate from subtle timing violations, aging effects, or marginal defects that escape standard semiconductor testing.

These errors leave no trace, yet a single one can distort model weights across interdependent nodes, quietly derailing a training run that may span weeks, involve over 25,000 GPUs, and cost more than $100 million [12]. In training clusters, even a single faulty processor can jeopardize the entire job. These workloads run across tightly coupled systems, each contributing to shared model parameters. If one chip introduces a silent error during synchronization, that corruption spreads throughout the cluster.
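A toy simulation shows how one silent error spreads during synchronization. Eight hypothetical workers hold identical gradients; one flips a single bit in one float32 value, and a naive averaging all-reduce then hands the corrupted result to every replica. This is a plain-Python stand-in, not a real collective-communication library, and the choice of bit 30 (the top exponent bit) is arbitrary.

```python
import struct


def flip_bit(x: float, bit: int) -> float:
    """Flip one bit of x's IEEE-754 float32 encoding (a stand-in for a silent hardware fault)."""
    (raw,) = struct.unpack("<I", struct.pack("<f", x))
    (y,) = struct.unpack("<f", struct.pack("<I", raw ^ (1 << bit)))
    return y


def allreduce_mean(grads: list[list[float]]) -> list[float]:
    """Toy all-reduce: the element-wise mean that every worker receives after sync."""
    n = len(grads)
    return [sum(col) / n for col in zip(*grads)]


if __name__ == "__main__":
    # Eight workers computed identical, healthy gradients (hypothetical values).
    workers = [[0.01, -0.02, 0.03] for _ in range(8)]
    # One chip silently flips the top exponent bit of a single value.
    workers[3][1] = flip_bit(workers[3][1], 30)
    synced = allreduce_mean(workers)
    # The averaged value every replica now applies is wildly wrong (roughly -8.5e35).
    print(synced)
```

No exception is raised and no checksum fails: the corrupted average is simply a valid float that all eight replicas apply to their weights.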

Download White Paper: Outsmarting Silent Data Corruption in AI Processors with Two-Stage Detection

Ensuring reliability has traditionally relied on periodic field testing to uncover potential failures. While effective for basic quality assurance, these methods may miss latent defects, workload-driven faults, accelerated aging, and SDCs. They are also time-consuming and difficult to streamline within data center environments running high-intensity GenAI. The limitations of these offline techniques point to the need for continuous, in-situ monitoring to maintain reliability at hyperscale.

Despite these diverse optimization strategies, all chipmakers share a common challenge. They must set conservative operating guard bands to ensure reliability. This necessity presents an overlooked opportunity for significant optimization that can shape who wins the GenAI race.

proteanTecs Real-Time Monitoring for Scalable GenAI Chips

As GenAI chips reach unprecedented levels of complexity, traditional design-time assumptions and static controls are no longer enough. Standard runtime methods such as AVS, DVFS, and AFS are static and rely on conservative guard bands. These approaches waste power, limit throughput, and fail to detect real-time reliability issues.

What chipmakers need is visibility into how each chip behaves under actual workloads. Not just design-time guard bands or environmental telemetry, but in-situ insights into timing margins, aging, and stress.

proteanTecs closes this critical gap by enabling a new class of in-chip applications that optimize each chip by tuning it in real time according to actual workloads.
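Tuning a chip in real time is easiest to picture as a feedback loop. The sketch below is a generic, hypothetical controller, not proteanTecs's algorithm: it reads a per-chip timing-margin measurement (picoseconds is an assumed unit) and nudges clock frequency up when slack is plentiful, backing off faster when margin runs thin. The `MarginController` class, thresholds, step sizes, and frequency limits are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class MarginController:
    """Hypothetical closed-loop frequency policy driven by measured timing margin.

    A generic illustration only; thresholds, step size, and limits are assumed values.
    """
    freq_mhz: float = 1600.0
    min_mhz: float = 800.0
    max_mhz: float = 2200.0
    step_mhz: float = 25.0
    low_margin_ps: float = 20.0    # below this, back off quickly
    high_margin_ps: float = 60.0   # above this, there is slack to use

    def update(self, margin_ps: float) -> float:
        """Adjust frequency from one margin reading and return the new setting."""
        if margin_ps < self.low_margin_ps:
            # Fast back-off: four steps down, never below the floor.
            self.freq_mhz = max(self.min_mhz, self.freq_mhz - 4 * self.step_mhz)
        elif margin_ps > self.high_margin_ps:
            # Slow ramp-up: one step up, never above the ceiling.
            self.freq_mhz = min(self.max_mhz, self.freq_mhz + self.step_mhz)
        return self.freq_mhz


if __name__ == "__main__":
    ctrl = MarginController()
    for margin in (80, 80, 70, 15, 40):   # hypothetical margin readings
        print(margin, ctrl.update(margin))
```

The asymmetric steps are a deliberate design choice: the loop gives up performance quickly when margin shrinks and reclaims it cautiously, which favors reliability over peak speed.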

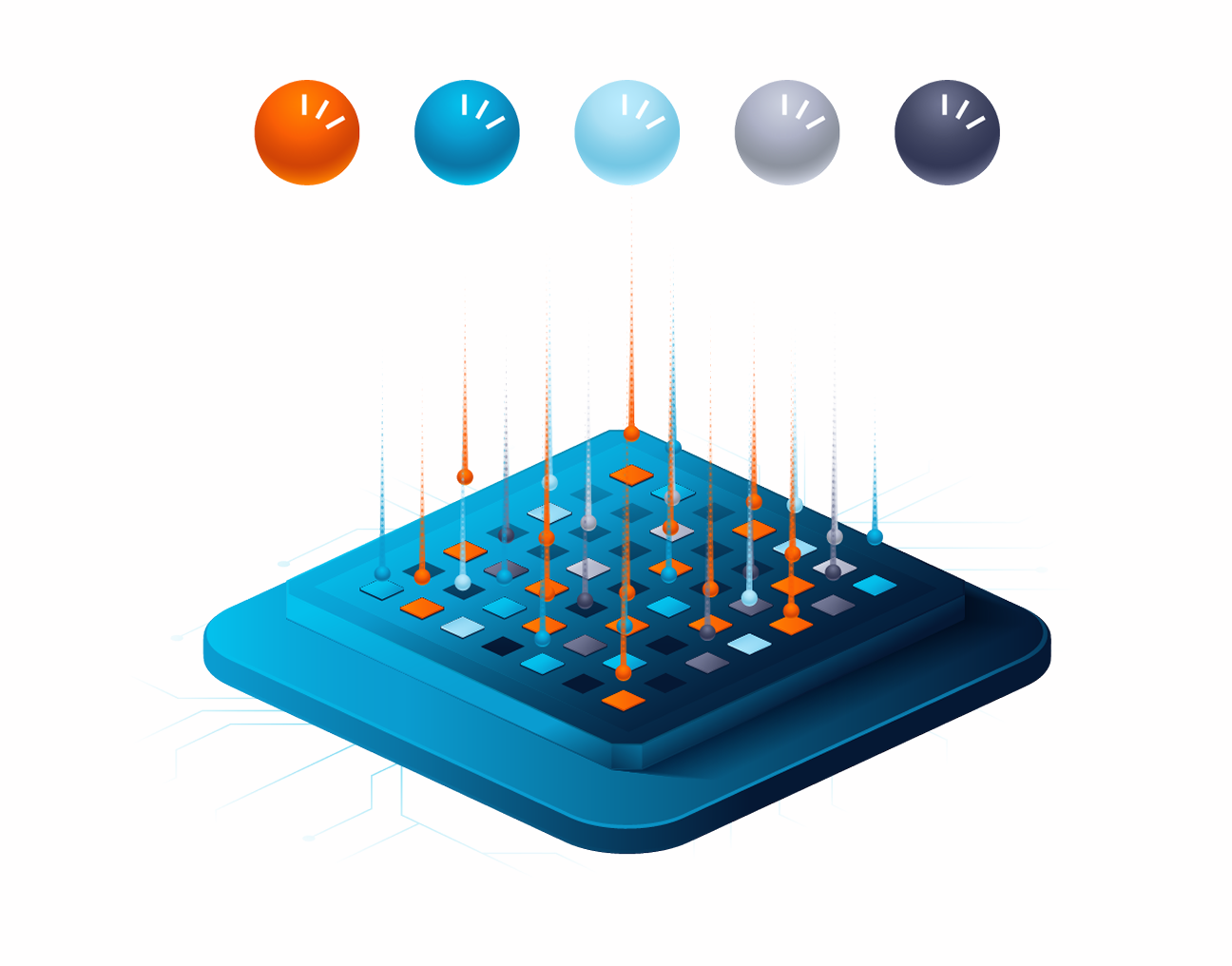

By embedding agents inside the chip, proteanTecs precisely monitors the timing margins of real, performance-limiting paths, along with application stress, operational and environmental effects, aging, latent defects, and process variation. This approach uncovers insights that legacy methods cannot detect. With dedicated algorithms, these insights power three breakthrough applications.

In the GenAI era, chipmakers must strategically balance unprecedented performance, stringent power efficiency, and rock-solid reliability. The complexity of achieving these goals calls for real-time, workload-aware optimization techniques that go beyond conventional guard bands and static methods. As GenAI continues its rapid evolution, embedding advanced monitoring and dynamic tuning capabilities directly within chips is emerging not only as a differentiator but as a necessity, one that will shape who ultimately leads this high-stakes technological revolution.


Together, these solutions turn conservative margins into a competitive advantage, allowing GenAI chipmakers and cloud operators to scale faster, safer, and smarter.

Want to learn how these capabilities deliver up to 12.5% power reduction and 8% higher performance? 👉 Read the full white paper to see how real-time in-chip optimization is redefining what’s possible in GenAI infrastructure.

This is part 3 of a 3-part series:

Part 1 unpacks the semiconductor shake-up driven by generative AI’s explosive rise: how generative AI is outpacing Moore’s Law, with generative models racing toward superintelligence while chipmakers scramble to keep up.

Part 2 delves deeper into the painful realities of scaling cloud AI infrastructure, examining the practical obstacles chipmakers face, including hardware failures and reliability issues such as Silent Data Corruption (SDC), surging power demands, and workload growth that continues to outpace Moore's Law.